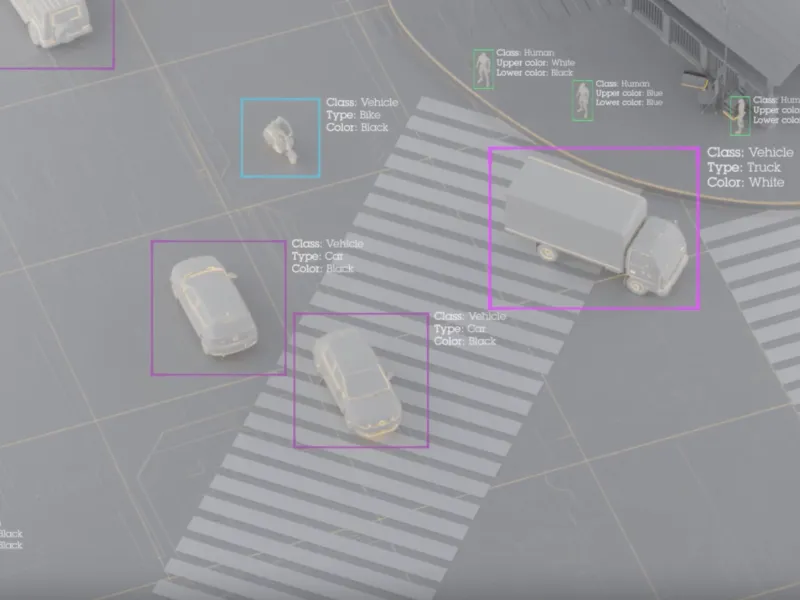

Whether you're building for edge, cloud, or hybrid environments, AXIS Scene Metadata lays the foundation for faster development, simpler scaling, and real-time automation. It delivers accessible analytics metadata in real time at the edge, organized, labeled, and standardized for easy integration.

Below is a summary of the latest updates to AXIS Scene Metadata, so you can build on this source of structured information.

Version 1.0 of the Analytics Data Format

The Analytics Data Format (ADF) is a collection of schemas and definitions used to represent scene metadata in a structured way. ADF was initially released as a beta; version 1.0 is now available in AXIS OS 12.8 and is recommended for production environments.

The beta versions will remain available until AXIS OS 13.0 to allow time for migration to the stable version. Refer to the Axis developer documentation for a detailed changelog and additional resources such as schemas and classification types.

Upcoming AXIS OS releases will also introduce powerful data processing and filtering capabilities that let users adapt the contents of the generated metadata to their specific needs. If you are a partner interested in early access to this functionality and in giving direct feedback on its development, we encourage you to contact your Axis TIP representative and try our AXIS OS beta releases.

New data source for object snapshots

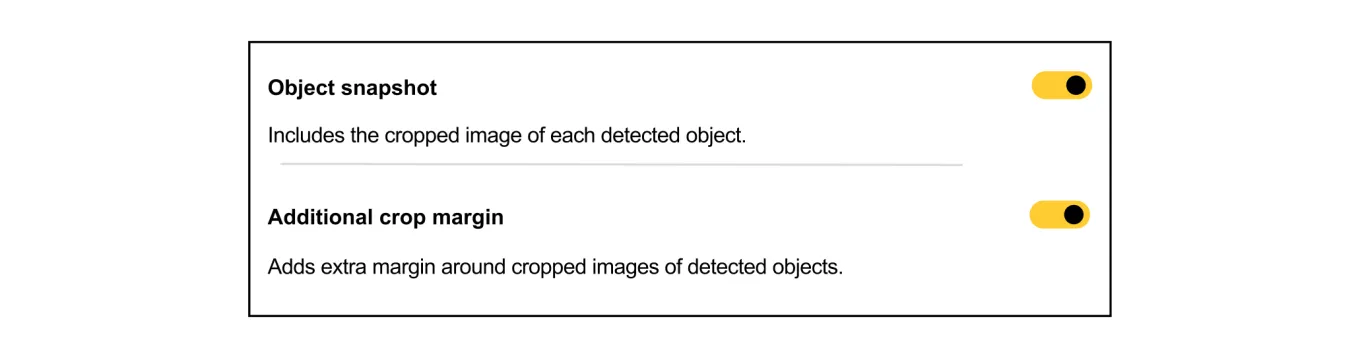

AXIS OS 12.8 adds a new data source, “object snapshots”, which delivers cropped images of detected objects based on the object snapshot feature. It complements the existing “frame-by-frame” and “consolidated” topics, each of which addresses different use cases.

Each message contains one snapshot of the detected object, following the schema specified in the Axis developer documentation. Multiple snapshots may be sent for the same object if better alternatives are found during the object’s track lifetime. Note that this functionality must be enabled before use.
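Because several snapshots can arrive for the same object, a consumer typically needs to decide which one to keep. The sketch below is a minimal client-side illustration, not Axis code. It assumes each message has already been received and decoded into a dictionary carrying a track identifier, a timestamp, and the image data. The keys `track_id`, `timestamp`, and `image`, and the `Snapshot`/`SnapshotStore` names, are hypothetical; the real field names come from the schema in the Axis developer documentation. It also assumes that a later snapshot for the same object is the better alternative.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Snapshot:
    # Hypothetical fields; the real message layout is defined by the schema
    # in the Axis developer documentation.
    track_id: str
    timestamp: float
    image_b64: str


class SnapshotStore:
    """Keeps one snapshot per tracked object, replacing it when a newer one arrives."""

    def __init__(self) -> None:
        self._latest: dict[str, Snapshot] = {}

    def on_message(self, msg: dict) -> Snapshot:
        """Parse one object-snapshot message (hypothetical keys) and update the store."""
        snap = Snapshot(
            track_id=str(msg["track_id"]),
            timestamp=float(msg["timestamp"]),
            image_b64=msg["image"],
        )
        current = self._latest.get(snap.track_id)
        # Assumption: a later snapshot for the same object is the "better alternative".
        # Older, out-of-order messages are ignored.
        if current is None or snap.timestamp >= current.timestamp:
            self._latest[snap.track_id] = snap
        return self._latest[snap.track_id]

    def get(self, track_id: str) -> Optional[Snapshot]:
        """Return the most recent snapshot for an object, or None if none was received."""
        return self._latest.get(track_id)


if __name__ == "__main__":
    store = SnapshotStore()
    store.on_message({"track_id": "42", "timestamp": 1.0, "image": "AAA"})
    store.on_message({"track_id": "42", "timestamp": 2.5, "image": "BBB"})
    print(store.get("42").image_b64)  # BBB
```

Keying by track identifier keeps memory bounded to one image per object; a production consumer would also evict entries once a track ends.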

Dewarped views on ARTPEC-8 fisheye cameras

Scene metadata can now be obtained through the available data sources for detected objects visible in the dewarped views (Panorama, View Area 1–4, Corner Left, and Corner Right). This provides wide-area coverage and supports multiple use cases with a single camera.

In these views, the bounding box coordinates of each detected object are transformed to align with the respective video view. Note that because the transformation is non-linear, object bounding boxes may become larger in the dewarped views.
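The exact dewarping applied by the camera is not described here. To illustrate why a non-linear transformation can enlarge a bounding box, the sketch below maps the outline of a box through a made-up radial distortion and takes the axis-aligned box around the result. `toy_warp` is a hypothetical stand-in for the real dewarping, and the normalized coordinates are an assumption. Because straight edges map to curves, the enclosing box can extend beyond the mapped corners.

```python
from __future__ import annotations

from typing import Callable, Iterable, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def toy_warp(p: Point, k: float = 1.0) -> Point:
    """Hypothetical radial distortion in normalized coordinates; NOT the camera's dewarping."""
    x, y = p
    s = 1.0 + k * (x * x + y * y)
    return (x * s, y * s)


def box_edge_points(box: Box, samples: int = 50) -> list[Point]:
    """Sample evenly spaced points along all four edges of an axis-aligned box."""
    x0, y0, x1, y1 = box
    pts: list[Point] = []
    for i in range(samples + 1):
        t = i / samples
        x = x0 + t * (x1 - x0)
        y = y0 + t * (y1 - y0)
        pts += [(x, y0), (x, y1), (x0, y), (x1, y)]
    return pts


def enclosing_box(points: Iterable[Point]) -> Box:
    """Axis-aligned bounding box of a set of points."""
    xs, ys = zip(*points)
    return (min(xs), min(ys), max(xs), max(ys))


def transform_box(box: Box, warp: Callable[[Point], Point], samples: int = 50) -> Box:
    """Warp the box outline point by point and return the box enclosing the curved result."""
    return enclosing_box(warp(p) for p in box_edge_points(box, samples))


if __name__ == "__main__":
    box = (0.2, -0.2, 0.6, 0.2)
    corners = [(0.2, -0.2), (0.2, 0.2), (0.6, -0.2), (0.6, 0.2)]
    corners_only = enclosing_box(toy_warp(c) for c in corners)
    full = transform_box(box, toy_warp)
    print("corners only:", corners_only)
    print("full outline:", full)
```

With this example box, the left edge bows toward the image center after warping, so the enclosing box starts slightly to the left of the mapped corners (about 0.208 instead of 0.216). Transforming only the four corners would therefore underestimate the area the object covers, which is why an aligned box in a dewarped view tends to be looser than in the original view.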